- publication

- ACM Transactions on Graphics

- authors

- Kaan Yücer, Alexander Sorkine-Hornung, Oliver Wang, Olga Sorkine-Hornung

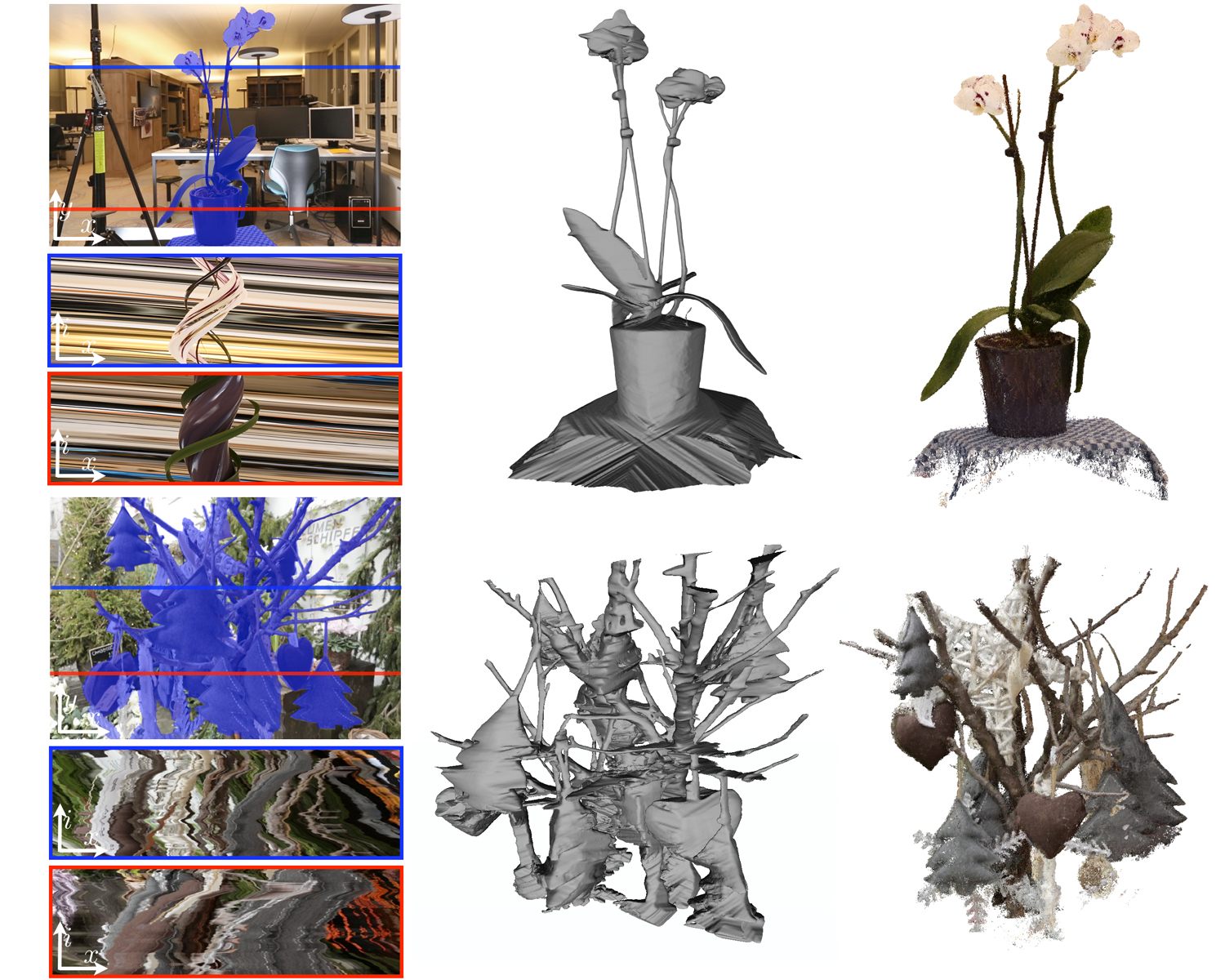

Example results of our method captured with a rotating (top) and a hand-held (bottom) camera. Slices of densely sampled light fields reveal continuous structures that arise as a result of motion parallax (blue and red rectangles). Our method leverages the coherency in this data to accurately segment the foreground object in both 2D (blue image mask) and 3D (mesh), which can be used to aid 3D reconstruction algorithms (colored point clouds).

abstract

Precise object segmentation in image data is a fundamental problem with various applications, including 3D object reconstruction. We present an efficient algorithm to automatically segment a static foreground object from highly cluttered background in light fields. A key insight and contribution of our paper is that a significant increase of the available input data can enable the design of novel, highly efficient approaches. In particular, the central idea of our method is to exploit high spatio-angular sampling on the order of thousands of input frames, e.g. captured as a hand-held video, such that new structures are revealed due to the increased coherence in the data. We first show how purely local gradient information contained in slices of such a dense light field can be combined with information about the camera trajectory to make efficient estimates of the foreground and background. These estimates are then propagated to textureless regions using edge-aware filtering in the epipolar volume. Finally, we enforce global consistency in a gathering step to derive a precise object segmentation both in 2D and 3D space, which captures fine geometric details even in very cluttered scenes. The design of each of these steps is motivated by efficiency and scalability, allowing us to handle large, real-world video datasets on a standard desktop computer. We demonstrate how the results of our method can be used for considerably improving the speed and quality of image-based 3D reconstruction algorithms, and we compare our results to state-of-the-art segmentation and multi-view stereo methods.

downloads

- Paper (ACM Transactions on Graphics, official version available at http://portal.acm.org/)

- Video

- BibTex entry

accompanying video

data sets

Please cite the above paper if you use any part of the images or results provided on the website or in the paper. For questions or feedback please contact us.

- africa (2.2 GB)

- basket (2.9 GB)

- decoration (2.2 GB)

- dragon (17.1 GB)

- orchid (6 GB)

- plant (4 GB)

- scarecrow (4.5 GB)

- ship (7 GB)

- statue (4.5 GB)

- thin plant (4.9 GB)

- torch (1.8 GB)

- trunks (2.6 GB)

acknowledgments

We are grateful to Changil Kim for his tremendous help with generating the results and comparisons. We would also like to thank Maurizio Nitti for rendering the Dragon dataset, Derek Bradley for the discussions on MVS, Joel Bohnes for helping with the figures, Benjamin Resch for helping with the camera calibrations, and Guofeng Zhang for helping with generating the ACTS results.